In the biblical tale of David and Goliath, a small, seemingly underpowered challenger takes down a giant through precision, skill, and agility. A similar dynamic is emerging in the field of generative AI. Ever since the launch of ChatGPT, we’ve been sold on the illusion that whoever has the biggest AI models will dominate the AI space. Now, mainstream LLMs such as ChatGPT are facing a sobering reality check: in our conversations with clients, we hear more and more stories of LLM limitations and risks playing out in practice.

Our answer? Every company needs to find its own unique path to AI success, and Small Language Models (SLMs) can be a leaner alternative to their larger counterparts. They offer companies a more efficient, affordable, and customizable way to harness the potential of AI.

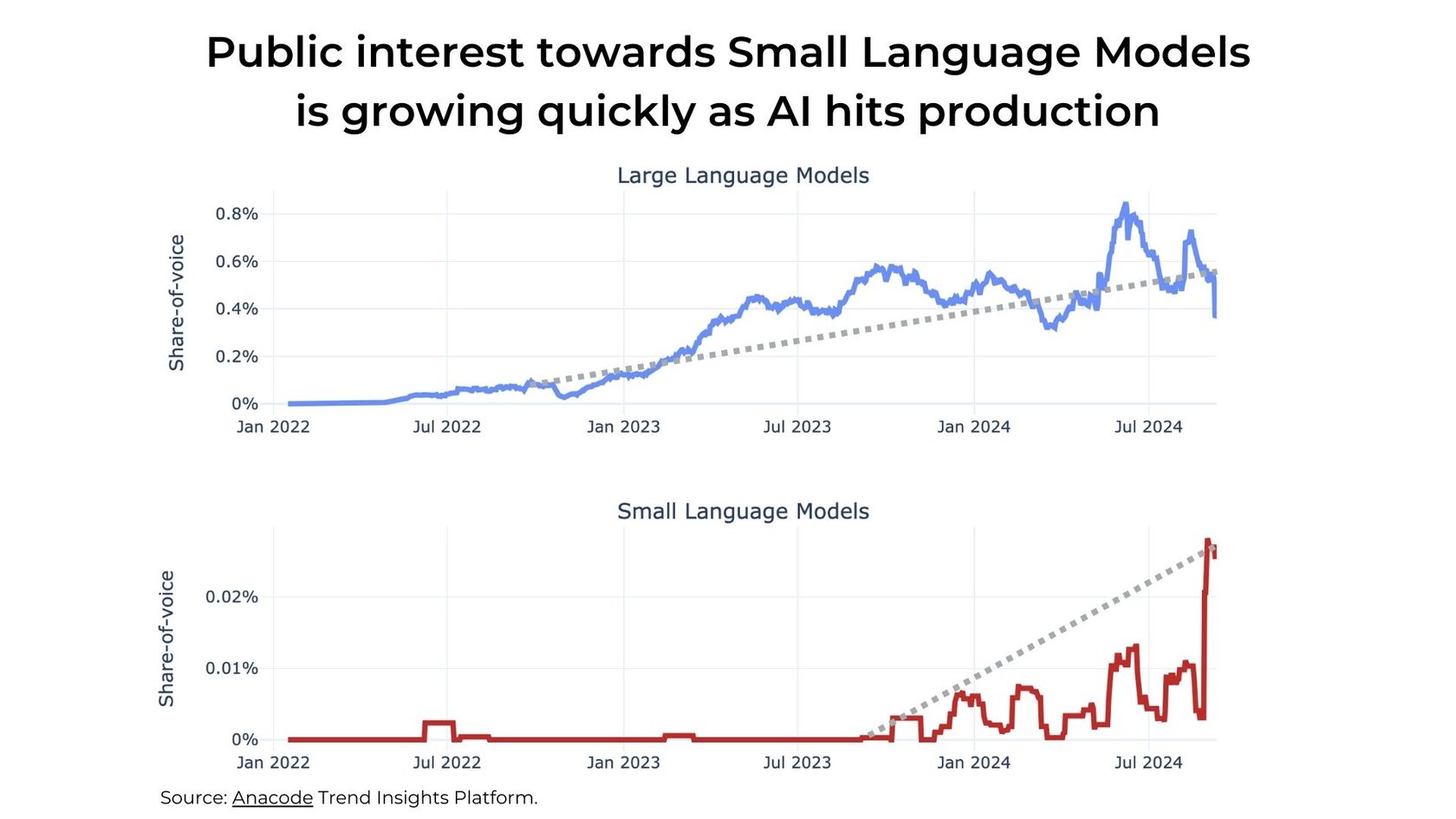

Figure 1: Public interest in SLMs is quickly increasing

Unpacking the power of Small Language Models

LLMs are trained on data crawled from the Web, at a scale that makes it difficult to curate the data or even to understand what it contains. This lack of transparency and control is also a major adoption barrier for companies. By contrast, SLMs are designed to operate with less data and fewer parameters, offering speed, agility, and accuracy on specific tasks. Because these models must deliver high quality with a smaller parameter count, their developers have to get creative elsewhere, namely in the composition of the training data and the architecture of the model.

For instance, Microsoft's Phi-2, a model with just 2.7 billion parameters, can run on mobile devices while still delivering performance comparable to much larger models like Llama 2. This is achieved through an efficient training process built on carefully curated, high-quality datasets and fewer computing resources, which makes such models accessible to businesses without the need for extensive cloud infrastructure (Analyticsdrift).
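To make the size difference concrete, here is a back-of-the-envelope estimate of how much memory the model weights alone occupy. It is a rough sketch under simplifying assumptions. It counts only the weights and ignores activations, the KV cache, and runtime overhead. It uses the common storage cost of 4, 2, 1, and 0.5 bytes per parameter for fp32, fp16, int8, and int4 precision. It takes Llama-2-70B, the largest Llama 2 variant, as the comparison point. The helper `weight_memory_gb` is our own illustrative function, not part of any library.

```python
# Back-of-the-envelope estimate of the memory needed to store model weights.
# Simplifying assumption: only the weights are counted; activations, the
# KV cache, and runtime overhead are ignored.

# Typical storage cost per parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Parameter counts: Phi-2 as cited above, Llama-2-70B for comparison.
    models = {"Phi-2": 2.7e9, "Llama-2-70B": 70e9}
    for name, params in models.items():
        for precision in BYTES_PER_PARAM:
            print(f"{name} @ {precision}: {weight_memory_gb(params, precision):.2f} GB")
```

At 16-bit precision, Phi-2's weights need about 5.4 GB, compared with about 140 GB for Llama-2-70B. Quantized to 4 bits, Phi-2 drops to roughly 1.35 GB. This footprint is small enough for consumer hardware rather than a data-center GPU cluster.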

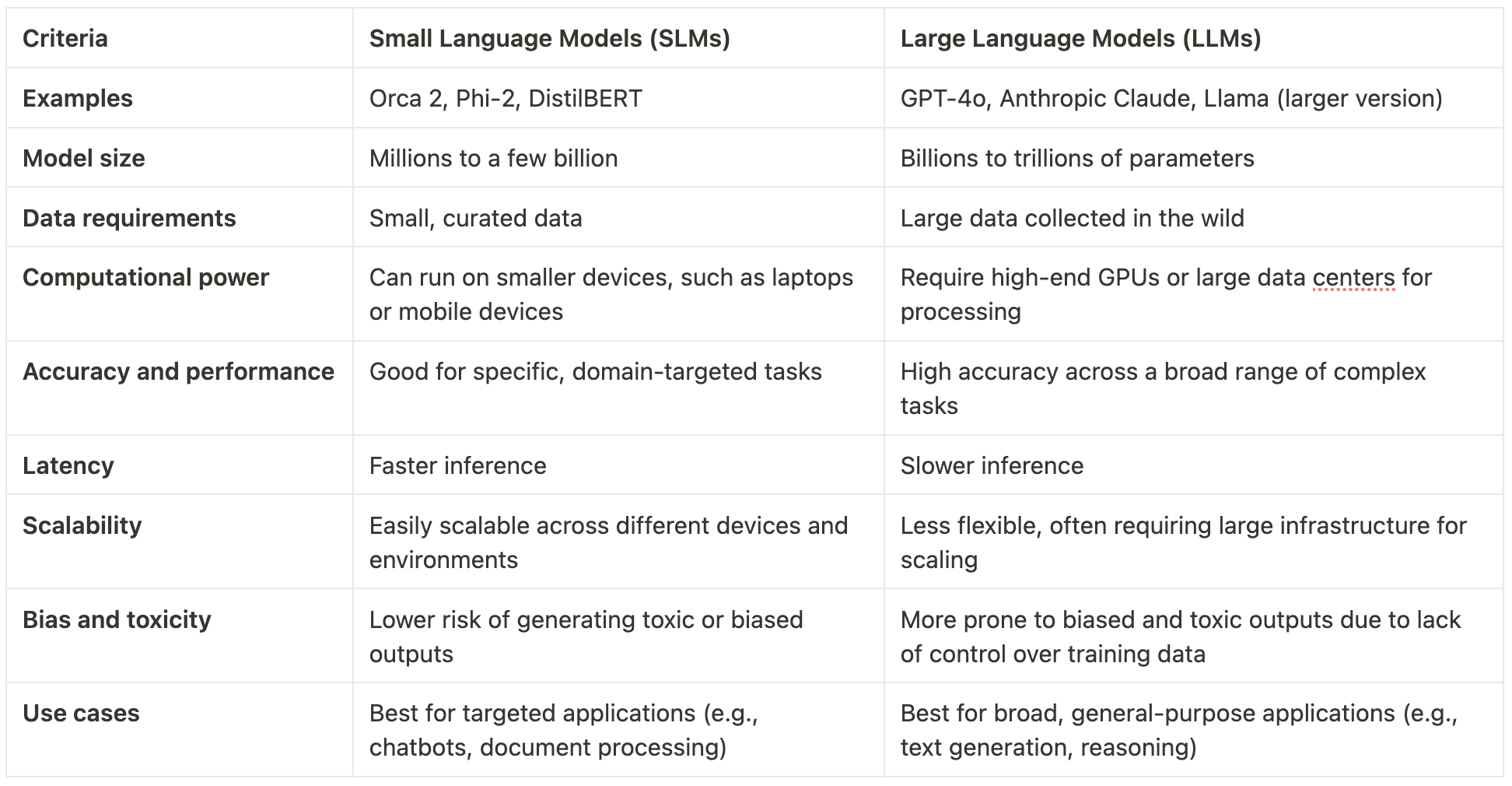

The following table summarizes how SLMs compare to their larger counterparts:

Table 1: Comparing SLMs vs. LLMs

Small Language Models offer more control and versatility

The technological innovation behind Small Language Models lies in their ability to break down complex neural networks into smaller, interpretable components. By "decomposing" the layers of a neural network into thousands of distinct features, SLMs can more precisely capture and control behavior in natural language processing tasks (Interesting Engineering). This enables these models to excel at specific functions such as translation, content generation, and text summarization, all while remaining faster and more cost-efficient than their larger counterparts.

One of the standout benefits of SLMs is their versatility. Whether they are used for intelligent document processing to streamline logistics or for generating code suggestions in real time, these models have broad applications across industries. For example, healthcare companies have adopted SLMs to assist with autonomous medical coding and to improve the accuracy of diagnostic tools, while legal teams rely on them for contract analysis and compliance tracking (AIMagazine).

SLMs also address several key challenges that businesses face with larger models, such as bias, fairness, and the reliability of outputs. Because they are trained on high-quality, domain-specific data, these models are less prone to errors or hallucinations within their domain, providing more trustworthy results (AI Journal). For companies with privacy concerns, SLMs can run on edge devices, reducing reliance on cloud data centers and enhancing security (Salesforce).

Small Language Models are a long-term trend

As the AI landscape evolves, Small Language Models naturally align with several other trends. One of the most significant is the move toward multimodal models that integrate text, image, and even video processing. For example, Microsoft's Phi-3 Vision model lets users input images and text and receive contextual responses, highlighting the potential for SLMs in sectors like customer service and the creative industries.

The rise of on-device AI is another major driver of SLM adoption. Rather than relying on remote data centers, companies are increasingly deploying AI models directly on devices such as laptops and mobile phones. This not only reduces operational costs but also improves speed and security by keeping data processing local (Gizmodo). The shift is especially critical in industries where data privacy and rapid decision-making are paramount, such as finance, healthcare, and defense.

Looking forward, sustainability is becoming an essential consideration in AI development. Because they require far less compute for training and inference, Small Language Models are much more energy-efficient than larger models, making them an attractive option for businesses seeking to minimize their carbon footprint while still leveraging cutting-edge AI capabilities.

The future of AI: small, smart, and scalable

As AI technology continues to evolve, the future belongs to models that strike a balance between power and efficiency. SLMs let you build specialized, cost-effective solutions that are easy to deploy and scale.

Anacode can become your trusted partner in implementing a unique AI strategy built around tailored models. Our AI journey started long before the launch of ChatGPT: for years, training, optimizing, and deploying custom models has been our “home turf.” We know that generic AI solutions only get our customers so far, so we always focus on an individual approach and scalability, ensuring that each model is sharply tuned to meet specific user needs.

Would you like to brainstorm which of your own AI use cases and challenges can be addressed with SLMs? Contact us today to explore possible solutions and shape a winning AI strategy!